Introduction

At Indeed, our mission is to help people get jobs. We connect job seekers with their next career opportunities and assist employers in finding the ideal candidates. This makes matching a fundamental problem in the products we develop.

The Ranking Models team is responsible for building machine learning models that drive matching between job seekers and employers. These models generate predictions used in the re-ranking phase of the matching pipeline, serving three main use cases: ranking, bid-scaling, and score-thresholding.

The Problem

Teams within Ranking Models have been using different decision-making frameworks for online experiments, which led to inconsistent model rollout decisions: some teams prioritized model performance metrics, while others focused on product metrics.

This divergence raised a critical question: should model performance metrics or product metrics be the primary measure of success? Every team had valid justifications for its current choice, so we decided to study the question more comprehensively.

To find an answer, we must first address two preliminary questions:

- How well does the optimization of individual models align with business goals?

- What metrics are important for modeling experiments?

🍰 We developed a parallel storyline about a dessert shop that we hope adds some intuition to the discussion: a dessert shop has recently opened. It specializes in strawberry shortcakes, and we are part of the team responsible for purchasing strawberries.

Preliminary Questions

How well does the optimization of individual models align with business goals?

🍰 How much do investments in strawberries contribute to the dessert shop’s business goals?

To begin, we will review how individual models are used within our systems and define how optimizing these models relates to the optimization of their respective components. Our goal is to assess the alignment between individual model optimization and the overarching business objectives.

Ranking

Predicted scores for ranking targets are used to calculate utility scores for re-ranking. These targets are trained to optimize binary classification tasks. As a result, optimization of individual targets may not fully align with the optimization of the utility score [1]. The performance gain from individual targets may be diluted or lost entirely when the models are used in the production system.

Further, the definition of utility may not always align with the business goals. For example, utility was once defined as total expected positive application outcomes for invite-to-apply emails while the product goal was to deliver more hires (which is a subset of positive application outcomes). Such misalignment further complicates translating performance gains from individual targets towards the business goals.

In summary, optimization of ranking models is partially aligned with our business goals.
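The dilution effect can be illustrated with a small sketch. Purely for illustration, it assumes a linear utility that sums weight × predicted score across targets. The target names, weights, and scores are hypothetical and do not describe our production formula. When one target carries a small weight, even a large change in its predictions leaves the re-ranked order untouched.

```python
def rank_by_utility(candidates, weights):
    """Return job IDs sorted by a hypothetical linear utility (highest first)."""

    def utility(candidate):
        # Weighted sum of the predicted scores for each ranking target.
        return sum(w * candidate["scores"][target] for target, w in weights.items())

    return [c["job_id"] for c in sorted(candidates, key=utility, reverse=True)]


def make_candidates(outcome_scores):
    # p_apply is fixed across scenarios; only the outcome model's predictions change.
    p_apply = {"A": 0.30, "B": 0.20, "C": 0.10}
    return [
        {"job_id": job, "scores": {"p_apply": p_apply[job], "p_outcome": outcome_scores[job]}}
        for job in ("A", "B", "C")
    ]


old_model = make_candidates({"A": 0.10, "B": 0.40, "C": 0.20})
new_model = make_candidates({"A": 0.05, "B": 0.30, "C": 0.60})  # now ranks C highest

small_weight = {"p_apply": 1.0, "p_outcome": 0.05}
large_weight = {"p_apply": 1.0, "p_outcome": 1.0}

print(rank_by_utility(old_model, small_weight))  # ['A', 'B', 'C']
print(rank_by_utility(new_model, small_weight))  # ['A', 'B', 'C']: the change is diluted
print(rank_by_utility(new_model, large_weight))  # ['C', 'B', 'A']
```

With the small weight, the new outcome model changes nothing downstream. This holds no matter how much better or worse it is on its own binary classification task.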

Bid-scaling

Predicted scores for bid-scaling targets determine the scaled bids: pacing bids are multiplied by the predicted scores to calculate the scaled bids. In some cases, additional business logic may be applied in the bid-scaling process. Such logic dilutes the impact of these models.

Scaled bids serve multiple functions in our system.

First, similar to ranking targets, the scaled bids are used to calculate utility scores for re-ranking. Therefore, for the same reason, the optimization of individual bid-scaling targets may not fully align with the optimization of the utility score.

Additionally, the scaled bids may be used to determine the charged price and as inputs to budget pacing algorithms. Ultimately, performance changes in individual bid-scaling targets could impact budget depletion and short-term revenue.

In summary, optimization of bid-scaling models is partially aligned with our business goals.

Score-thresholding

Predicted scores for score-thresholding targets are used as filters within the matching pipeline. The matched candidates with scores that fall outside of the pre-determined threshold are filtered out. Similarly, these targets are trained to optimize binary classification tasks. As a result, the optimization of individual targets aligns fairly well with their usage.

In some cases, however, additional business logic may be applied during the thresholding process (e.g., dynamic thresholding), which may dilute the impact from score-thresholding models.

Further, the target definition may not always align with the business goals. For example, the p(Job Seeker Positive Response|Job Seeker Response) model optimizes for positive interactions from job seekers, so it may not be the most effective lever for driving job-to-profile relevance. Conversely, the p(Bad Match|Send) model optimizes for identifying “bad matches” based on job-to-profile relevance labeling. This makes it a potentially effective lever for driving more relevant matches, which was once a key focus for recommendation products.

In summary, optimization of score-thresholding models could be well aligned or partially aligned with our business goals.

What metrics are important for modeling experiments?

🍰 How do we assess a new strawberry supplier?

Let’s explore key metrics for evaluating online modeling experiments. Metrics are grouped into three categories:

- Model Performance: measures the performance of an ML model across various tasks

- Product: measures user interactions or business performance

- Overall Ranking Performance: measures the performance of a system on the ranking task

(You may find the mathematical definitions of model performance metrics in the Appendix.)

Normalized Entropy

Model Performance

Normalized Entropy (NE) measures the goodness of prediction for a binary classifier. In addition to predictive performance, it implicitly reflects calibration [2].

NE in isolation may not be informative enough to estimate predictive performance. For example, if a model predicts twice the true probability and we apply a global multiplier of 0.5 for calibration, the resulting NE will improve, although the predictive performance remains unchanged [3].

Further, when measured online, NE can only be calculated on the matches delivered or shown to users. This set may differ from the matches the model scored in the re-ranking stage.
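Here is a minimal sketch of the recalibration example above. It uses the standard binary-label form of NE (labels in {0, 1}; see the Appendix) and synthetic groups made up for illustration. Predictions that are exactly twice the true rate are rescaled by 0.5. NE drops, yet the ordering of the scores, and therefore the model's ability to separate positives from negatives, is unchanged.

```python
import math


def normalized_entropy(labels, preds):
    """Average log loss divided by the entropy of the average label (background rate)."""
    n = len(labels)
    log_loss = -sum(
        y * math.log(p) + (1 - y) * math.log(1 - p) for y, p in zip(labels, preds)
    ) / n
    base = sum(labels) / n
    background_entropy = -(base * math.log(base) + (1 - base) * math.log(1 - base))
    return log_loss / background_entropy


# Synthetic data: four groups of 10 examples with positive rates 0.1, 0.2, 0.3, 0.4.
labels, true_rates = [], []
for rate, positives in [(0.1, 1), (0.2, 2), (0.3, 3), (0.4, 4)]:
    labels += [1] * positives + [0] * (10 - positives)
    true_rates += [rate] * 10

doubled = [2 * r for r in true_rates]      # model over-predicts by 2x
recalibrated = [0.5 * p for p in doubled]  # global 0.5 multiplier

ne_doubled = normalized_entropy(labels, doubled)
ne_recalibrated = normalized_entropy(labels, recalibrated)
print(f"NE (doubled):      {ne_doubled:.3f}")
print(f"NE (recalibrated): {ne_recalibrated:.3f}")  # lower, with the same score ordering
```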

ROC-AUC

Model Performance

ROC-AUC is a good indicator of the predictive performance for a binary classifier. It’s a reliable measure for evaluating ranking quality without taking into account calibration [3].

However, because ROC-AUC does not account for calibration, measuring model performance solely with ROC-AUC may overlook over- or under-prediction issues. A poorly fitted model may overestimate or underestimate predictions, yet still demonstrate good discrimination power. Conversely, a well-fitted model might show poor discrimination if the predicted probabilities for positives are only slightly higher than those for negatives [2].

Similar to NE, when measured online, we can only calculate the ROC-AUC based on the matches delivered or shown to the users.
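The pairwise interpretation in the Appendix gives a direct way to compute ROC-AUC and shows why it is blind to calibration. This sketch uses toy data and a quadratic pairwise loop, which is fine for illustration but not for large samples. Multiplying every score by 0.5, a pure calibration shift, leaves ROC-AUC unchanged because only the relative ordering of positives and negatives matters.

```python
def roc_auc(labels, scores):
    """ROC-AUC as the probability that a random positive outscores a random negative."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for sp in positives:
        for sn in negatives:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


labels = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7]
halved = [s / 2 for s in scores]  # same ordering, very different calibration

print(roc_auc(labels, scores))  # 0.888... (8 of 9 positive/negative pairs ordered correctly)
print(roc_auc(labels, halved))  # identical: ROC-AUC ignores the calibration shift
```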

nDCG

Model Performance Overall Ranking Performance

nDCG measures ranking quality by accounting for the positions of relevant items. It rewards rankings that place more relevant items at higher positions. It’s a common performance metric for evaluating ranking algorithms [2].

nDCG is normally calculated using a list of items sorted by rank scores (e.g., blended utility scores). Relevance labels could be defined in various ways, e.g., offline relevance labeling or user funnel engagement signals. Note that when relevance labels come from offline labeling, we can additionally measure nDCG on matches in the re-ranked list that were not delivered or shown to the users.

When model performance improves against its objective function, nDCG may or may not improve. There are a few scenarios where we may observe discrepancies:

- Mismatch between model targets and relevance label (e.g., model optimizes for job applications while relevance label is based on job-to-profile fit)

- Diluted impact due to system design

- Model performance change is inconsistent across segments

Avg-Pred-to-Avg-Label

Model Performance

Avg-Pred-to-Avg-Label measures the calibration performance for a binary classifier by comparing the average predicted score to average labels, where the ideal value is 1. It provides insight into whether the mis-calibration is due to over- (when above 1) or under-prediction (when below 1).

The calibration error is measured in aggregate, which means that errors in one score range may be canceled out by opposite errors in another.

The error is normalized against the baseline class probabilities, which allows us to infer the degree of mis-calibration on a relative scale (e.g., 20% over-prediction against the average label).

Calibration performance directly impacts Avg-Pred-to-Avg-Label. Predictive performance alone won’t improve it.
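To make the aggregation caveat concrete, here is a minimal sketch with made-up segments. One score range under-predicts and another over-predicts, yet the overall Avg-Pred-to-Avg-Label still lands on the ideal value of 1. Computing the same ratio per segment exposes both problems.

```python
def avg_pred_to_avg_label(labels, preds):
    """Average prediction divided by average label; 1.0 means calibrated in aggregate."""
    return (sum(preds) / len(preds)) / (sum(labels) / len(labels))


# Hypothetical segments: low scores under-predict, high scores over-predict.
low_preds, low_labels = [0.2] * 10, [1] * 4 + [0] * 6    # average label 0.4
high_preds, high_labels = [0.8] * 10, [1] * 6 + [0] * 4  # average label 0.6

overall = avg_pred_to_avg_label(low_labels + high_labels, low_preds + high_preds)
low = avg_pred_to_avg_label(low_labels, low_preds)
high = avg_pred_to_avg_label(high_labels, high_preds)

print(f"overall: {overall:.2f}")  # 1.00: the two errors cancel out
print(f"low:     {low:.2f}")      # 0.50: 50% under-prediction
print(f"high:    {high:.2f}")     # 1.33: 33% over-prediction
print(f"|overall - 1| = {abs(overall - 1):.2f}")  # distance from the ideal value
```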

Average/Expected Calibration Error

Model Performance

Calibration Error is an alternative measure for calibration performance. It measures the reliability of the confidence of the score predictions. Intuitively, for class predictions, calibration means that if a model assigns a class with 90% probability, that class should appear 90% of the time.

Average Calibration Error (ACE) and Expected Calibration Error (ECE) capture the difference between the average prediction and the average label across different score bins. ACE calculates the simple average of the errors of individual score bins, while ECE calculates the weighted average of the errors weighted by the number of predictions in the score bins. ACE could over-weight bins with only a few predictions.

Both metrics measure the absolute value of the errors, and they capture errors at a more granular level than Avg-Pred-to-Avg-Label. On the other hand, because the errors are absolute values, it can be difficult to tell whether the model over- or under-predicts. Also, these metrics are not normalized against the baseline class probabilities.

Similar to Avg-Pred-to-Avg-Label, calibration performance directly impacts Calibration Error. Predictive performance alone won’t improve it.
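The difference between the two averages is easiest to see with a sketch. The definitions do not pin down the binning scheme, so this example assumes 10 equal-width bins on [0, 1], and the data is made up. A bin containing only two badly calibrated predictions dominates ACE, whereas ECE scales that bin by its share of examples.

```python
from collections import defaultdict


def calibration_errors(labels, scores, n_bins=10):
    """Return (ACE, ECE) using equal-width score bins on [0, 1] (an assumed binning)."""
    bins = defaultdict(list)
    for y, s in zip(labels, scores):
        # Clamp so that a score of exactly 1.0 falls into the last bin.
        bins[min(int(s * n_bins), n_bins - 1)].append((y, s))

    n = len(scores)
    ace = ece = 0.0
    for members in bins.values():  # only non-empty bins are stored (M+ of them)
        avg_score = sum(s for _, s in members) / len(members)
        avg_label = sum(y for y, _ in members) / len(members)
        gap = abs(avg_label - avg_score)
        ace += gap / len(bins)         # simple average over non-empty bins
        ece += gap * len(members) / n  # weighted by the bin's share of examples
    return ace, ece


# 20 well-calibrated predictions at 0.15 (3 positives) and 2 badly calibrated ones at 0.95.
labels = [1] * 3 + [0] * 17 + [0, 0]
scores = [0.15] * 20 + [0.95, 0.95]

ace, ece = calibration_errors(labels, scores)
print(f"ACE = {ace:.3f}")  # 0.475: the two-example bin counts as much as the 20-example bin
print(f"ECE = {ece:.3f}")  # 0.086: the sparse bin is weighted by 2/22
```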

Job seeker positive engagement metrics

Product

Job seeker positive engagement metrics capture job seekers’ interactions with our products that we generally consider implicitly positive, such as clicking on a job post or submitting an application. Because these signals are implicit, they may be misaligned with users’ true preferences. For example, job seekers may click on a job simply because the job title is novel.

When model performance improves against its objective function, job seeker positive engagement metrics may or may not improve. There are a few scenarios where we may observe discrepancies:

- Misalignment between model targets and engagement metrics (e.g., a ranking model optimized for application outcomes, which negatively correlate with job seeker engagement)

- Diluted impact due to system design

- Model improvement in the “less impactful” region (e.g., improvement on the ROC curve far from thresholding region)

Outcome metrics

Product

Outcome metrics measure the (expected) outcomes of job applications. The outcomes could be captured by employer interactions (e.g., employers’ feedback on the job applications, follow-ups with the candidates), survey responses (e.g., hires), or model predictions (e.g., expected hires model).

Employers’ feedback can be either implicit or explicit. When it is implicit, it again leaves room for possible misalignment with true preferences – for example, we’ve observed spammy employers who aggressively reach out to candidates regardless of their fit to the position.

Additionally, outcome metrics based on user interactions have potential observability issues. Not all post-apply interactions happen on Indeed, which could lead to two problems: bias (e.g., observed outcomes confounded by on-platform engagement) and sparseness.

When model performance improves against its objective function, outcome metrics may or may not improve. There are a few scenarios where we may observe discrepancies:

- Misalignment between model targets and the product goal (e.g., a ranking model optimized for application outcomes while the product specifically aims to deliver more hires)

- Diluted impact due to system design

- Model performance change is inconsistent across segments (e.g., the model improved mostly in identifying the most preferred jobs, while not improving in differentiating the more preferred from the less preferred jobs, resulting in popular jobs being crowded out)

User-provided relevance metrics

Product

User-provided relevance metrics capture match relevance based on user interactions on components that explicitly ask for feedback on relevance, for example, relevance ratings on invite-to-apply emails, dislikes on Homepage and Search.

User-provided relevance metrics often suffer from observability issues as well. Feedback is optional in most scenarios, so sparseness and potential biases are two major drawbacks.

When model performance improves against its objective function, user-provided relevance metrics may or may not improve. For example, we may observe discrepancy when there’s misalignment between model targets and relevance metrics.

Labeling-based relevance metrics

Product Overall Ranking Performance

Labeling-based relevance metrics measure match relevance through a systematic labeling process. The labeling process may follow rule-based heuristics or leverage ML-based models.

The Relevance team at Indeed has developed a few match relevance metrics:

- LLM-based labels: match quality labels generated by model-based (LLM) processes.

- Rule-based labels: match quality labels generated by rule-based processes.

Similar to nDCG, we may also use labeling-based relevance metrics to assess overall ranking performance, e.g., GoodMatch rate@k, given the blended utility ranked lists.

When model performance improves against its objective function, labeling-based relevance metrics may or may not improve. We may observe discrepancies when there’s misalignment between model targets and relevance metrics.

Revenue

Product

Revenue measures advertisers’ spending on sponsored ads. The spending could be triggered by different user actions depending on the pricing models, e.g., clicks, applies, etc.

Short-term revenue change is often driven by bidding and budget pacing algorithms, which ultimately influence the delivery and budget depletion. Long-term revenue change is additionally driven by user satisfaction and retention.

When model performance improves against its objective function, revenue may or may not improve.

- For short-term revenue, bid-scaling models could impact delivery and ultimately budget depletion. However, system design could dilute the effect. For example, when monetization objectives carry only a trivial weight in the re-ranking utility formula, improvements to bid-scaling models may not have a meaningful impact on revenue.

- For long-term revenue, we expect a directionally positive correlation, though discrepancies could occur, e.g., when model targets are misaligned with relevance or when system design dilutes the impact.

Evaluation Metrics for Online Modeling Experiments – Our Thoughts

🍰 Purchasing higher-quality, tastier strawberries may not always lead to more sales or happier customers. Consider a few scenarios:

- The dessert shop started to develop a new series of core products featuring chocolates as the main ingredient. It becomes more important to find strawberries that offer a good balance in taste and texture with the chocolate.

- The dessert shop started to develop a new series of fruit cakes. Strawberries are now only one of many fruits that are used.

- There’s a recent trend in gelato cakes. The dessert shop decided to introduce a few gelato cakes that use far fewer strawberries. However, the gelato hype may fade, and strawberry shortcake has always been our star product.

- The dessert shop moved to a location that is much harder to find, losing a significant share of its regular customers.

Product Metrics vs. Model Performance Metrics

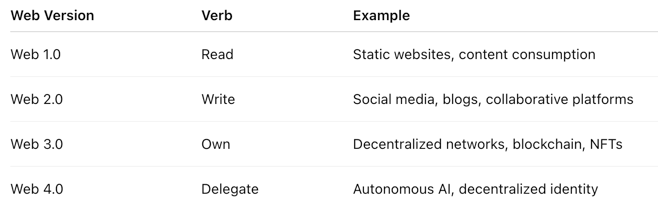


Top recommendation: Improvement over product metrics and guardrail on individual model performance metrics.

As previously discussed, optimizing individual models often doesn’t directly translate into achieving business goals, and the relationship between the two can be complex. Therefore, investment decisions based solely on improvements in model performance are likely to be ineffective.

- When model targets and business goals are misaligned, it’s challenging to derive product impact from model performance impact. Making decisions based on product metric improvements ensures the impact is realized.

- When the model’s contribution is diluted due to system design, it prompts investment in bigger bets or alternatively in components that allow incremental impact to be realized more effectively.


Secondary recommendation: Improvement over either product metrics or overall ranking performance metrics.

Although optimizing individual models doesn’t always directly meet business goals, improving overall ranking performance, as measured by metrics like nDCG@k, aligns better with business objectives. This approach also helps mitigate downstream dilution or biases, so we can concentrate on improving re-ranking performance more effectively. That said, when the downstream dilution is by design, simply ignoring its impact could lead to ineffective investment decisions.

This approach may also be valuable when the company temporarily focuses on short-term business goals. It allows ranking to be less distracted and more focused on delivering high quality matches when products take temporary detours.

Among Product Metrics

Product metrics for experiment decision making should ultimately be driven by business goals and product strategy. We want to share a few thoughts on the usage of different types of product metrics:

User engagement metrics are relatively easy to move in short-term experiments, and they are often a fair proxy for positive user feedback. However, we should be mindful that they can have an ambiguous relationship with long-term business goals [4]. For example, clicks and applications are often considered implicit positive feedback, yet it costs job seekers little to explore, or even apply to, jobs they are not a great fit for. At the same time, exploring or applying to more jobs could be driven by bad user experiences (e.g., when they have not yet received satisfactory outcomes).

Relevance metrics, conversely, generally align well with long-term business goals [4]. Nevertheless, there are a few drawbacks:

- User-based relevance metrics could be hard to collect and measure in short-term online experiments.

- Heuristic-based metrics may not have great accuracy.

- Model-based metrics could be hard to explain and may carry inherent biases that are hard to detect.

Therefore, we may consider leveraging a combination of user engagement metrics and relevance metrics to achieve a good balance in business goal alignment, observability, and interpretability.

Lastly, revenue is a key performance indicator for the business in the long term. However, short-term revenue may have an ambiguous relationship with long-term business goals as well [4]. We may drive more clicks or applications to increase spending in the short term, but if we are not bringing satisfactory outcomes to our users, they may not continue to use our product in the future. Hence, we recommend using revenue as a success metric only when we are improving components within the bidding ecosystem, where there are short-term objectives defined for the bidding algorithms to achieve. In all other cases, we may keep revenue as a monitoring metric to prevent unintended short-term harms.

Among Model Performance Metrics

We recommend setting guardrails on individual model performance with Normalized Entropy, because we don’t want to degrade either predictive performance or score calibration. In addition, we recommend monitoring ROC-AUC to help with deep-dive analysis and debugging.

For bid-scaling models, we recommend additionally monitoring calibration performance with Avg-Pred-to-Avg-Label. This provides visibility into over- and under-prediction and scales the error to the baseline class probability.

References

- [1] Handling Online-Offline Discrepancy in Pinterest Ads Ranking System

- [2] Predictive Model Performance: Offline and Online Evaluations

- [3] Practical Lessons from Predicting Clicks on Ads at Facebook

- [4] Data-Driven Metric Development for Online Controlled Experiments: Seven Lessons Learned

- [5] Measuring classifier performance: a coherent alternative to the area under the ROC curve

- [6] How Well do Offline Metrics Predict Online Performance of Product Ranking Models? (Amazon Science)

- [7] Relaxed Softmax: Efficient Confidence Auto-Calibration for Safe Pedestrian Detection

Appendix

Normalized Entropy

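Normalized Entropy (NE) is defined as follows [3]:

$$\mathrm{NE} = \frac{-\frac{1}{N}\sum_{i=1}^{N}\left(y_i \log p_i + (1 - y_i)\log(1 - p_i)\right)}{-\left(p \log p + (1 - p)\log(1 - p)\right)}$$

where N is the number of examples, y_i ∈ {0, 1} is the true label, p_i is the predicted score, and p is the background average label.

Note: NE normalizes the cross-entropy loss by the entropy of the background probability (average label). It’s equivalent to 1 - Relative Information Gain (RIG) [2].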

ROC-AUC

The Receiver Operating Characteristic (ROC) curve plots true positive rate (TPR) against the false positive rate (FPR) at each threshold setting. ROC-AUC stands for Area under the ROC Curve.

Note: Given its definition, ROC-AUC could also be interpreted as the probability that a randomly drawn member of class 0 will have a score lower than the score of a randomly drawn member of class 1 [5].

nDCG

nDCG stands for normalized Discounted Cumulative Gain. We define Discounted Cumulative Gain (DCG) at position k for a ranking list of query q_i as

Then, we normalize DCG to [0,1] for each query and define nDCG by summing the DCG values for all queries:

maxDCG is the DCG value of the ranking list obtained by sorting items in descending order of relevance [6].

Note: “query” may not be relevant in all search ranking tasks. Based on the product’s design, we may replace it with suitable groupings. For example, for the homepage, we may group on “feed.”
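The following sketch computes per-group nDCG@k. It assumes the widely used exponential gain (2^rel - 1) with a log2(position + 1) discount; a linear gain (rel) is an equally common choice. The relevance labels and feed IDs are hypothetical. Each feed's list is ordered by its rank score. Its nDCG@k is DCG@k divided by the DCG@k of the same items sorted by relevance (maxDCG). The per-feed values can then be aggregated across feeds as described above.

```python
import math


def dcg_at_k(relevances, k):
    """DCG@k for relevance labels listed in ranked order (position 1 first)."""
    return sum(
        (2 ** rel - 1) / math.log2(position + 1)
        for position, rel in enumerate(relevances[:k], start=1)
    )


def ndcg_at_k(relevances, k):
    """DCG@k normalized by maxDCG@k, the DCG of the ideal (relevance-sorted) order."""
    max_dcg = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / max_dcg if max_dcg > 0 else 0.0


# Hypothetical relevance labels, ordered by each feed's blended utility score.
ranked_feeds = {
    "feed_a": [2, 1, 0, 0],  # already in ideal order
    "feed_b": [0, 2, 1, 0],  # most relevant item shown second
}
per_feed = {feed: ndcg_at_k(rels, k=3) for feed, rels in ranked_feeds.items()}
print(per_feed)  # feed_a is 1.0; feed_b is below 1.0
```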

Avg-Pred-to-Avg-Label

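$$\text{Avg-Pred-to-Avg-Label} = \frac{\frac{1}{N}\sum_{i=1}^{N} p_i}{\frac{1}{N}\sum_{i=1}^{N} y_i}$$

where N is the number of examples, y_i is the true label, and p_i is the predicted score.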

Note: The percentage change in this value may not be fully informative since the ideal value is 1. To use it for experimental measurements, we may consider taking the Abs(actual – 1) or establishing alternative decision boundaries.

Average/Expected Calibration Error

Average Calibration Error and Expected Calibration Error are defined as follows [7]:

$$\mathrm{ACE} = \frac{1}{M^{+}} \sum_{m=1}^{M^{+}} \left| A_m - S_m \right|$$

$$\mathrm{ECE} = \sum_{m=1}^{M^{+}} \frac{|B_m|}{N} \left| A_m - S_m \right|$$

where M+ is the number of non-empty bins, B_m is the set of examples in bin m, N is the total number of examples, S_m is the average score for bin m, and A_m is the average label for bin m.

Note:

- Average calibration error is a simple average of calibration error across different score range bins

- Expected calibration error is the weighted average of calibration error across different score range bins, weighted by the number of examples in the bin