Have you ever been embarrassed by the first iteration of one of your machine learning projects, where you didn’t include obvious and important features? In the practical hustle and bustle of trying to build models, we can often forget about the observation step in the scientific method and jump straight to hypothesis testing.

Data scientists and their models can benefit greatly from qualitative methods. Without doing qualitative research, data scientists risk making assumptions about how users behave. These assumptions could lead to:

- neglecting critical parameters,

- missing a vital opportunity to empathize with those using our products, or

- misinterpreting data.

In this post, we’ll explore how qualitative methods can help all data scientists build better models, using a case study of Indeed’s new lead routing machine learning model, which ultimately generated several million dollars in revenue.

What are qualitative methods and how are they different from quantitative methods?

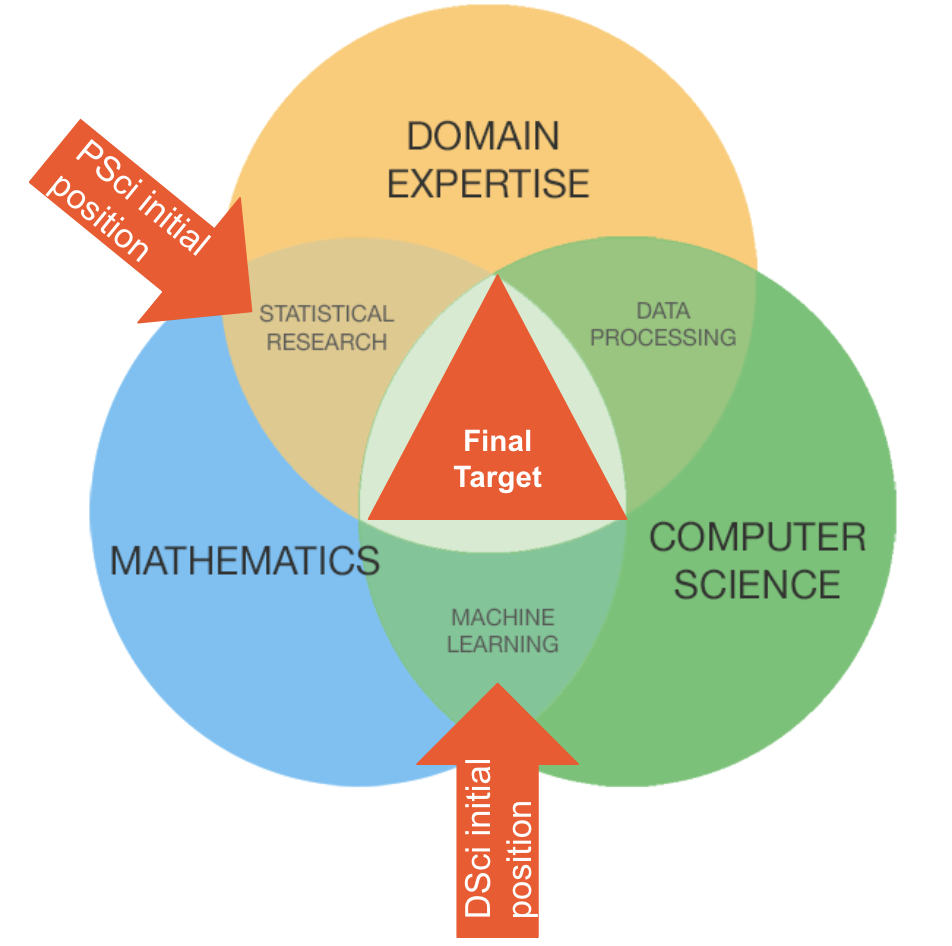

Few data scientists are formally trained in qualitative methods. They’re more deeply familiar with quantitative methods like A/B testing, surveys, and regressions. Quantitative methods are great for answering questions like “How much does the average small business spend on a job posting?”, “What are the skills that make someone a data scientist?”, or even “How many licks does it take to get to the center of a Tootsie Roll Pop?” (The answer is 3. Three licks.)

But there are some questions that quantitative methods can’t answer, such as “Why do account executives reach out to this lead instead of that lead?” or “How do small businesses make the decision to sponsor or not sponsor a job?” Or the truly deep question: “Why do you want to get to the center of the Tootsie Roll Pop?”

To answer these questions, qualitative researchers rely on methods like in-depth interviews, participant observation, content analysis and usability studies. These methods involve more direct contact with who and what you’re studying. They allow you to better understand how and why people do what they do, and what kinds of meaning they ascribe to different behaviors.

Put another way, quantitative methods can tell you the what, the how much, or how often; qualitative methods can tell you the why or the how.

Cartoon created by Indeed UX Research Manager Dave Yeats using cmx.io

Why should you use qualitative methods? A case study in Lead Generation

Our Lead Generation team recently benefited greatly from the use of qualitative methods. When an employer posts a job, it represents a revenue opportunity for Indeed. We route that employer to an account executive, who then reaches out and helps the employer set an advertising budget to sponsor their job. This increases the job’s visibility and therefore the speed at which the employer makes a successful hire. Employers who have not yet spent with us on Indeed are referred to as “leads.”

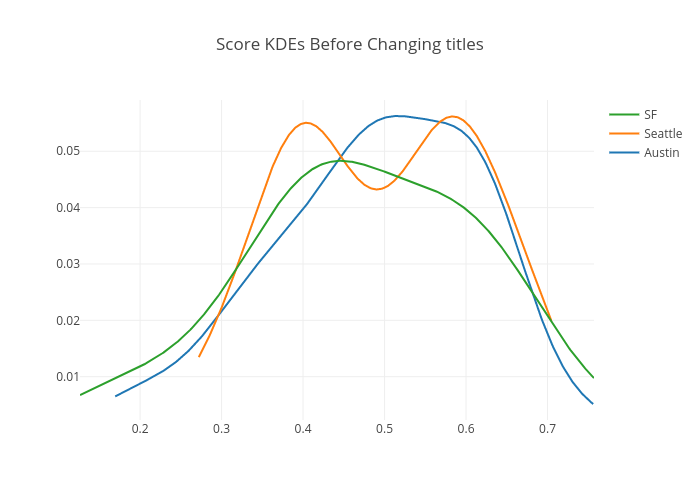

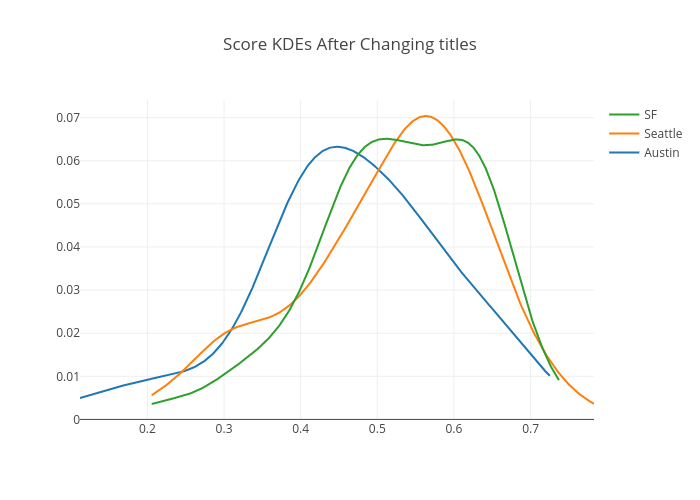

Some leads are better than others, however. We wanted to be able to give leads a score on a scale from one to five stars that would indicate our best estimate for whether or not they would spend. Our Product Science team decided to build a machine learning model that would score leads and route them more effectively. But where to start? Prior to this project, we had little experience with lead scoring and little intuition about what a good lead would look like. How could we even know what features should be in our model?

To answer that question, we turned to people with the most hands-on experience with leads: account executives themselves. Not only are they experts on what makes a good lead, they would also be the beneficiaries of our efforts. We took a three-pronged qualitative approach:

- Observation. To learn about the day-to-day sales experience, each member of our team shadowed different reps and listened to them on sales calls. We observed how they would select which lead to call, how they would decide what to talk about on the call, and how they actually made deals.

- Interviews. We sat down with several sales managers and representatives across the company and asked them questions about leads they had previously decided to call or drop, like “How do you pick which leads to call first?” or “Why did you decide to drop this lead?”

- Content Analysis. We combed through thousands of open-ended responses to a company-wide survey of account executives to better understand their pain points with regard to leads.

We learned a lot! Just by spending a few hours on three simple qualitative studies, we collected a long list of potential features. Had we not sat down next to members of the sales team and observed as they worked, we would never have obtained these insights. Our next step was to start digging into the data and validating how generalizable the findings from reps were.
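To make that validation step concrete, here is a minimal sketch of checking one rep-suggested signal against historical outcomes. Everything in it is an assumption for illustration: the feature name `has_phone_number`, the `spent` outcome field, and the toy records are invented, not features or data from the actual model.

```python
from collections import defaultdict


def conversion_rate_by_feature(leads, feature):
    """Return {feature_value: (n_leads, conversion_rate)} for one candidate feature."""
    counts = defaultdict(lambda: [0, 0])  # feature value -> [n_leads, n_spent]
    for lead in leads:
        bucket = counts[lead[feature]]
        bucket[0] += 1
        bucket[1] += int(lead["spent"])
    return {value: (n, spent / n) for value, (n, spent) in counts.items()}


# Toy, made-up records; real input would be historical lead outcomes.
leads = [
    {"has_phone_number": True, "spent": True},
    {"has_phone_number": True, "spent": True},
    {"has_phone_number": True, "spent": False},
    {"has_phone_number": True, "spent": True},
    {"has_phone_number": False, "spent": False},
    {"has_phone_number": False, "spent": True},
    {"has_phone_number": False, "spent": False},
    {"has_phone_number": False, "spent": False},
]

# Compare how often leads spent, with and without the hypothetical signal.
rates = conversion_rate_by_feature(leads, "has_phone_number")
for value, (n, rate) in sorted(rates.items()):
    print(f"has_phone_number={value}: {n} leads, {rate:.0%} spent")
```

A gap between the two groups suggests the reps' intuition may generalize, but with real data you would also want a large enough sample and a significance test before promoting the signal to a model feature.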

With the intuition we gained from our qualitative studies on account executives’ behaviors and thought processes, we ultimately built a machine learning model that generated millions of dollars in annual incremental revenue. And we didn’t stop there: we kept interviewing and shadowing reps to get their feedback on the model. We built a new version that generated additional annual incremental revenue. And we made sure to market our new model so people knew about it.
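As a purely illustrative aside, a one-to-five-star lead score could be derived from a model’s predicted probability of spending by using fixed cut points. This is a sketch under stated assumptions, not the scoring logic of the model described here: the function name and the evenly spaced thresholds are hypothetical.

```python
import bisect

# Hypothetical cut points on the predicted probability of spending.
# These values are assumptions for illustration only.
STAR_THRESHOLDS = [0.2, 0.4, 0.6, 0.8]


def stars_from_probability(p):
    """Map a predicted probability in [0, 1] to a rating from 1 to 5 stars."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # bisect_right counts how many thresholds are <= p (0 through 4).
    return bisect.bisect_right(STAR_THRESHOLDS, p) + 1


for p in (0.05, 0.5, 0.95):
    print(p, "->", stars_from_probability(p), "stars")
```

In practice the cut points might instead be chosen from quantiles of historical scores so that each star level holds a useful share of leads.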

In short, these qualitative studies kept us grounded and built empathy with our end users. Without qualitative studies, the models we built would have been out of touch with reality, making it harder for us to address our users’ needs. With qualitative methods, we infused our models with intuition and working hypotheses that we could later verify with quantitative data.

Where to start learning the basics for qualitative methods

In the case study above, our end users were our coworkers here at Indeed. It’s worth noting that it’s not always as simple to conduct qualitative studies with external users. Here at Indeed, we have a fantastic UX Qualitative Research team to turn to for these kinds of studies. We encourage you to reach out to such teams at your own companies, and if they don’t exist yet, create them. Work with them. Shadow them. Buy them a beer. They are wonderful!

But don’t just stop there. Below are some of our favorite readings and resources on qualitative methods, recommended by former academics here at Indeed.

- “When to Use Which User-Experience Research Methods”: A great article by the Nielsen Norman Group on identifying the right method for the research question at hand.

- Learning from Strangers: A classic guide on how to ask questions in an in-depth interview.

- “How to Conduct User Interviews”: A shorter guide geared toward industry and product development.

- “5 Steps to Create Good User Interview Questions”: A great Medium post on avoiding biased or leading questions in in-depth interviews.

- Writing Ethnographic Field Notes: The seminal work on how to collect details during observational studies. Geared toward anthropological ethnographies, but with a lot of great tips for being more aware of details in day-to-day interactions as well.

- Salsa Dancing in the Social Sciences: While arguably one of the weirdest book titles, this is an enjoyable and approachable overview of the benefits of qualitative methods.

- Don’t Make Me Think: Steve Krug focuses primarily on usability, but his book offers good tips for observing how people interact with websites.

If you are passionate about methods and data science, check out product science and data science jobs at Indeed!

How Qualitative Methods Support Better Data Science—cross-posted on Medium.